The Rise of Industry-Specific LLMs: Why Generic AI Models Are No Longer Competitive for Enterprises

Executive Summary

The first phase of Generative AI adoption was dominated by general-purpose models trained on the public internet. That era is ending.

Enterprises in BFSI, healthcare, retail & logistics, telecom, and manufacturing are now rejecting generic LLMs for a simple reason:

You cannot build differentiated enterprise intelligence using undifferentiated models.

Generic LLMs lack the domain context, regulatory grounding, and operational understanding required in high-stakes enterprise environments. As a result, industry-specific LLMs are emerging as one of the fastest-scaling GenAI shifts heading into 2026, as organizations move from experimentation to production-grade, outcome-driven AI.

1. Why Generic LLMs Are No Longer Enough for Enterprise Transformation

1. They lack regulatory context

BFSI models need:

- RBI, GDPR, PCI-DSS, Basel III corpora

- fraud patterns

- credit risk taxonomies

Healthcare models need HIPAA + medical ontologies.

Manufacturing models need equipment, safety, and supply chain ontologies.

Generic models can’t provide this.

2. They hallucinate in high-stakes decision environments

A generic LLM can invent compliance rules, medical insights, and financial interpretations.

Enterprises cannot risk “creative reasoning” in regulated environments.

3. They cannot reason using enterprise knowledge

Enterprise knowledge lives in SOPs, policies, tickets, audits, product documentation, architecture diagrams, and proprietary data models. Vertical LLMs are tuned on, or grounded in, these sources and learn their patterns.

4. They fail to integrate into existing enterprise workflows

Enterprise decisions require deterministic reasoning, audit trails, explainability, chain-of-thought governance, and multi-step decision flows.

Generic LLMs aren’t built for this level of control.
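The audit-trail requirement can be made concrete with a minimal sketch. It assumes a hypothetical `call_model(prompt, params)` function standing in for any LLM endpoint, records every call in an append-only log, and chains the entries with SHA-256 hashes so that editing any past record makes `verify()` fail. `AuditLog` and `audited_call` are illustrative names, not part of any product or library. Setting temperature to 0 reduces sampling randomness but does not by itself guarantee identical outputs.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Callable, List


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only log where each entry stores the hash of the previous entry."""
    entries: List[dict] = field(default_factory=list)

    def record(self, prompt: str, response: str, model_params: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "prompt": prompt,
            "response": response,
            "model_params": model_params,
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


def audited_call(call_model: Callable[[str, dict], str], log: AuditLog, prompt: str) -> str:
    # Temperature 0 reduces sampling randomness; it does not guarantee identical
    # outputs on every endpoint. call_model is a hypothetical stand-in.
    params = {"temperature": 0.0}
    response = call_model(prompt, params)
    log.record(prompt, response, params)
    return response


if __name__ == "__main__":
    def fake_model(prompt: str, params: dict) -> str:
        return f"Decision for: {prompt}"

    demo_log = AuditLog()
    audited_call(fake_model, demo_log, "Approve loan application 123?")
    audited_call(fake_model, demo_log, "Flag transaction 456?")
    print(demo_log.verify())             # True
    demo_log.entries[0]["response"] = "edited"
    print(demo_log.verify())             # False
```

Hash chaining is one simple way to make a log tamper-evident; a real deployment would also store the log outside the application's write access.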

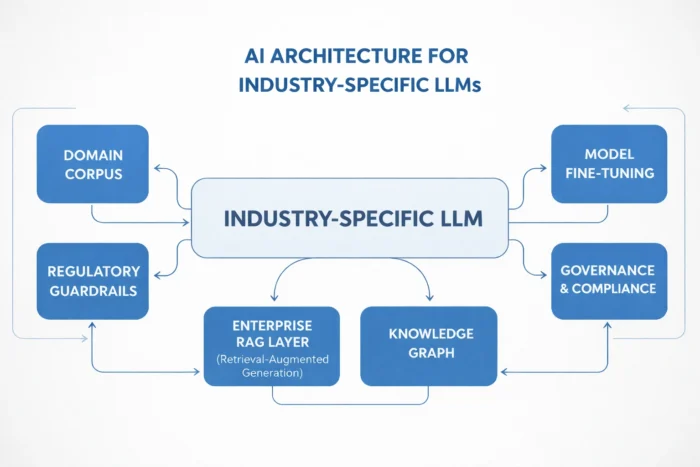

2. What Industry-Specific LLMs Actually Look Like

Industry models incorporate:

1. Domain-tuned corpora

Finance: risk scoring models, fraud networks

Healthcare: SNOMED, ICD codes

Retail: merchandising logic, customer behavior models

2. Regulatory guardrails

Policy checks constrain what the model can output, so responses are compliant by design.
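A guardrail of this kind can be sketched as a filter applied to model output before it reaches a user. The example assumes one illustrative policy: PCI-DSS requires primary account numbers to be masked when displayed, so the filter finds 13 to 19 digit candidates and uses the Luhn checksum to avoid masking order IDs or other numbers. Keeping only the last four digits is a policy choice assumed here, and the function names are hypothetical; a production guardrail would combine many such rules.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def mask_card_numbers(text: str) -> str:
    """Replace Luhn-valid card numbers with a mask that keeps only the last four digits."""
    def _mask(match: re.Match) -> str:
        digits = re.sub(r"[ -]", "", match.group())
        if not luhn_valid(digits):
            return match.group()  # leave non-card numbers (order IDs, etc.) untouched
        return "*" * (len(digits) - 4) + digits[-4:]

    return CARD_PATTERN.sub(_mask, text)


if __name__ == "__main__":
    print(mask_card_numbers("Card 4111 1111 1111 1111 is on file."))
    print(mask_card_numbers("Order 1234567890123 has shipped."))
```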

3. Operational context

LLMs understand industry workflows.

4. Retrieval layers tied to enterprise knowledge

Retrieval-augmented generation (RAG) becomes domain-aware rather than keyword-based.
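The difference between keyword and domain-aware retrieval can be sketched in a few lines. The example assumes a tiny, hypothetical ontology that maps synonyms such as "heart attack" and "myocardial infarction" to a shared concept ID, then matches documents on concepts instead of literal words. A real system would draw on a full terminology such as SNOMED CT and usually combine it with embedding-based search; this is a toy illustration only.

```python
import re
from typing import Dict, List, Set

# Hypothetical mini-ontology: surface terms mapped to shared concept IDs.
# A real system would load these mappings from a terminology such as SNOMED CT.
ONTOLOGY: Dict[str, str] = {
    "heart attack": "C_MI",
    "myocardial infarction": "C_MI",
    "high blood pressure": "C_HTN",
    "hypertension": "C_HTN",
}


def words(text: str) -> List[str]:
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def concepts(text: str) -> Set[str]:
    """Return the concept IDs whose surface terms occur as whole phrases in the text."""
    padded = " " + " ".join(words(text)) + " "
    return {cid for term, cid in ONTOLOGY.items() if f" {term} " in padded}


def keyword_search(query: str, docs: List[str]) -> List[str]:
    """Baseline: keep documents that contain every query word literally."""
    q = set(words(query))
    return [d for d in docs if q <= set(words(d))]


def concept_search(query: str, docs: List[str]) -> List[str]:
    """Domain-aware: rank documents by how many query concepts they share."""
    q = concepts(query)
    scored = [(len(q & concepts(d)), d) for d in docs]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [d for score, d in ranked if score > 0]


if __name__ == "__main__":
    docs = [
        "Discharge summary: acute myocardial infarction, treated in cath lab.",
        "Clinic note: hypertension managed with lifestyle changes.",
    ]
    print(keyword_search("heart attack", docs))   # [] because no literal word overlap
    print(concept_search("heart attack", docs))   # only the discharge summary
```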

3. The Most Active Industries Adopting Vertical LLMs

BFSI

- Credit underwriting

- Fraud detection

- KYC/AML operations

- Regulatory reporting

- Customer dispute resolution

Healthcare

- Clinical documentation

- Medical coding

- Diagnostics support

- Patient communication

Retail & CPG

- Demand forecasting

- Dynamic pricing

- Customer experience automation

Telecom

- Network diagnostics

- Outage prediction

- Self-service activation

4. Why Industry-Specific LLMs Are Accelerating in 2026

What started as early adoption in 2025 is now becoming a strategic mandate in 2026.

1. Enterprises want differentiation, not generic chatbot functionality

An industry LLM becomes a strategic asset, not a commodity.

2. Fine-tuning costs have decreased dramatically

Enterprises can now tune models, with far smaller data requirements, for:

- Specific languages

- Specific tasks

- Specific regulatory boundaries
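One common reason tuning has become cheaper is parameter-efficient fine-tuning. Low-Rank Adaptation (LoRA) is named here as an example technique, not one the article prescribes: it freezes a weight matrix of shape d×k and trains two small matrices B (d×r) and A (r×k), so trainable parameters fall from d·k to r·(d+k). The arithmetic below assumes a 4096×4096 projection and rank 8, values chosen only for illustration. Note that this lowers compute and memory cost; it is separate from the smaller data requirements mentioned above.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the full d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update B @ A, with B: d x r and A: r x k."""
    return r * (d + k)


if __name__ == "__main__":
    d, k, r = 4096, 4096, 8  # illustrative sizes, not tied to any specific model
    full = full_finetune_params(d, k)
    lora = lora_params(d, k, r)
    print(full)                          # 16777216
    print(lora)                          # 65536
    print(f"{100 * lora / full:.2f}%")   # 0.39%
```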

3. RAG + Domain LLM = Enterprise Reasoning Engine

The real power lies not in the model itself, but in combining domain data with structured reasoning.
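How retrieved domain data and structured instructions combine can be sketched as prompt assembly. This minimal example assumes a retriever has already returned `Passage` objects (a hypothetical type) and shows two controls: every claim must cite a source ID, and the function returns `None` when nothing was retrieved, so the workflow can escalate to a person instead of letting the model answer from general knowledge. The prompt wording is illustrative, not a tested template.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Passage:
    source_id: str  # e.g. a policy or SOP identifier (hypothetical)
    text: str


def build_grounded_prompt(question: str, passages: List[Passage]) -> Optional[str]:
    """Assemble a prompt that restricts the model to cited enterprise sources.

    Returns None when retrieval found nothing, so the caller can escalate
    instead of letting the model answer from general knowledge.
    """
    if not passages:
        return None
    context = "\n".join(f"[{p.source_id}] {p.text}" for p in passages)
    return (
        "Answer using only the sources below. Cite the source ID for every claim.\n"
        "If the sources do not contain the answer, reply exactly: INSUFFICIENT CONTEXT.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    passages = [Passage("POL-7", "Refunds above 500 USD require supervisor approval.")]
    print(build_grounded_prompt("Who approves a 900 USD refund?", passages))
```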

5. PureSoftware POV: Building Industry LLMs That Understand Your Enterprise

In 2026, competitive advantage will not come from access to AI models, but from how deeply those models understand industry realities.

PureSoftware helps organizations develop industry-grade AI by providing:

- Domain-specific corpora curation

- Regulatory-aligned LLM governance

- Enterprise-tuned RAG pipelines

- Model guardrails and policy enforcement

- Data governance + AI security

- Multi-agent workflow orchestration

- Full-stack LLMOps for secure deployment

Conclusion

Industry-specific LLMs now define the operating standard for enterprise AI in 2026.

Organizations that move early will create AI models that are:

- More accurate

- More compliant

- More defensible

- More aligned with their operating realities

And more importantly, impossible for competitors to replicate.

Frequently Asked Questions

Are domain LLMs more expensive?

Not necessarily. Fine-tuning an existing model and grounding it with RAG cost far less than training a model from scratch.

Are they more secure than generic models?

Yes, when they are deployed so that data never leaves controlled boundaries and outputs are checked against regulatory constraints.

Will generic LLMs become obsolete for enterprises?

Not obsolete, but insufficient for high-stakes or domain-dependent workflows.